Published 2025-07-08

Keywords

- Pose Estimation

- Behavior Classifier

- ResNet-50

- Trajectory Analysis

- Random Forest

- Decision Tree

Copyright (c) 2025 Romesa Rao, Salman Qadri, Rao Kashif

This work is licensed under a Creative Commons Attribution 4.0 International License.

Abstract

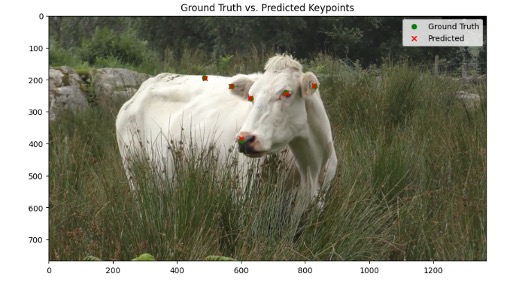

Accurate monitoring of animal health and behavior is crucial for improving welfare and productivity in livestock management. Traditional observation methods are time-consuming and prone to subjective bias. To address these challenges, we propose an automated system for behavioral pattern recognition using deep learning-based pose estimation. Specifically, we use ResNet-50, a deep convolutional neural network, to detect key anatomical landmarks such as the nose, eyes, ears, and body center. By tracking these keypoints across frames, we generate movement trajectories that help identify behavioral patterns. For behavior classification, we first applied a decision tree algorithm, which achieved an accuracy of 60%. To improve performance, we then implemented a random forest classifier, which raised the accuracy to 96%. The system classifies seven behaviors: "stand," "sit," "eat," "drink," "aggressive," "sit with legs tied," and "let go of the tail." The random forest model achieved its highest accuracy on the "stand" and "aggressive" behaviors and its lowest on the "eat" behavior. Additionally, our pose estimation model showed high precision and recall, indicating robust keypoint detection with minimal deviation from ground-truth annotations. This automated system reduces the need for manual observation and provides a reliable tool for monitoring animal behavior. Potential applications include animal studies and livestock management, where the system offers a scalable and user-friendly solution for real-time behavior analysis.
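As a rough illustration of how tracked keypoints can be turned into trajectory descriptors, the following minimal Python sketch summarises the per-frame positions of a single keypoint. It is not the authors' implementation: the function name `trajectory_features`, the chosen features (path length, net displacement, mean speed, largest single step), the frame rate, and the sample coordinates are all assumptions made for this example.

```python
import math


def trajectory_features(track, fps=30.0):
    """Summarise the trajectory of one keypoint.

    track: list of (x, y) pixel coordinates, one entry per video frame.
    fps:   frame rate of the video (an assumed value, not from the paper).
    """
    if len(track) < 2:
        raise ValueError("need at least two frames to form a trajectory")

    # Euclidean distance moved between each pair of consecutive frames.
    steps = [math.dist(a, b) for a, b in zip(track, track[1:])]

    path_length = sum(steps)                         # total distance travelled
    net_displacement = math.dist(track[0], track[-1])  # start-to-end distance
    duration = (len(track) - 1) / fps                # elapsed time in seconds

    return {
        "path_length": path_length,
        "net_displacement": net_displacement,
        "mean_speed": path_length / duration,
        "max_step": max(steps),
    }


# Hypothetical "nose" keypoint positions over four frames (illustrative only).
nose = [(0.0, 0.0), (3.0, 4.0), (3.0, 4.0), (6.0, 8.0)]
features = trajectory_features(nose, fps=1.0)
print(features)
```

Descriptors of this kind, computed for each tracked landmark, are one plausible form of input for a tree-based classifier such as a decision tree or a random forest. The actual feature set used in the study is not specified in the abstract.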
